producer.sh

View the log in the Stream dashboard. After waiting a few seconds, you will see that the log was pushed to Elasticsearch and is shown in Kibana.

The Compose file (version: "3.9") sets ELASTIC_PASSWORD: &elastic-password helloworld for Elasticsearch. For Kibana it sets ELASTICSEARCH_HOSTS:, ELASTICSEARCH_USERNAME: kibana_system and ELASTICSEARCH_PASSWORD: &kibana-password kibanahelloworld~

The Elasticsearch service exposes its endpoint on port 9200, and Kibana is exposed on port 5601. After that, we get a ready-made solution for collecting and parsing log messages, plus a convenient dashboard in Kibana.

In this docker-compose.yml file, I defined two services, es and kibana. They are deployed to a bridge network so they can communicate by service name.

We launch the test application, generate log messages, and receive them.

Filebeat also has out-of-the-box solutions for collecting and parsing log messages for widely used tools such as Nginx, Postgres, etc. For example, to collect Nginx log messages, just add a label to its container, co.elastic.logs/module: "nginx", and include hints in the config file.

Step 2: Connect to the Elastic Stack. Connections to Elasticsearch and Kibana are required to set up Filebeat.

The Filebeat input and output interfaces are defined in the config file, starting with the filebeat.inputs: section.

First, run the following command: `docker run -d -p 80:80 -v /var/log:/var/log --name mynginx nginx`. Then run the following command to collect logs from the mynginx container: `docker run -d --volumes-from mynginx -v /config-dir/filebeat.yml:/usr/share/filebeat/filebeat.yml --name myfilebeat /beats/filebeat:5.6.`

To store log files outside of containers, a volume is created in docker-compose.yml (version: "3.8").

We need a service whose log messages will be sent for storage. As such a service, let's take a simple application written using FastAPI, the sole purpose of which is to generate log messages. In my opinion, this approach allows a deeper understanding of Filebeat; besides, I went the same way myself. As part of the tutorial, I propose to move from setting up collection manually to automatically searching for sources of log messages in containers. The installation process of Docker Compose (stand-alone version) is described in detail below.
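To make the idea of a log-generating service concrete, here is a minimal sketch in Python. It is not the tutorial's FastAPI application: the web layer is left out, and it uses only the standard library to write one JSON object per line to a file. The names `JsonFormatter`, `build_logger`, `generate` and the file name `app.log` are assumptions made for illustration.

```python
import json
import logging
import random
import time
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_logger(path: str, name: str = "log-generator") -> logging.Logger:
    """Create a logger that appends JSON lines to the file at `path`."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.propagate = False  # keep output out of the root logger
    logger.handlers.clear()   # avoid duplicate handlers on repeated calls
    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


def generate(logger: logging.Logger, count: int, delay: float = 0.0) -> None:
    """Emit `count` messages at random levels, pausing `delay` seconds between them."""
    levels = [logging.DEBUG, logging.INFO, logging.WARNING, logging.ERROR]
    for i in range(count):
        logger.log(random.choice(levels), "generated message %d", i)
        time.sleep(delay)


if __name__ == "__main__":
    # Hypothetical file name; a log shipper would be pointed at this path.
    generate(build_logger("app.log"), count=5, delay=1.0)
```

Writing one JSON object per line keeps each message self-contained, which tends to make later parsing simpler than with free-form text.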

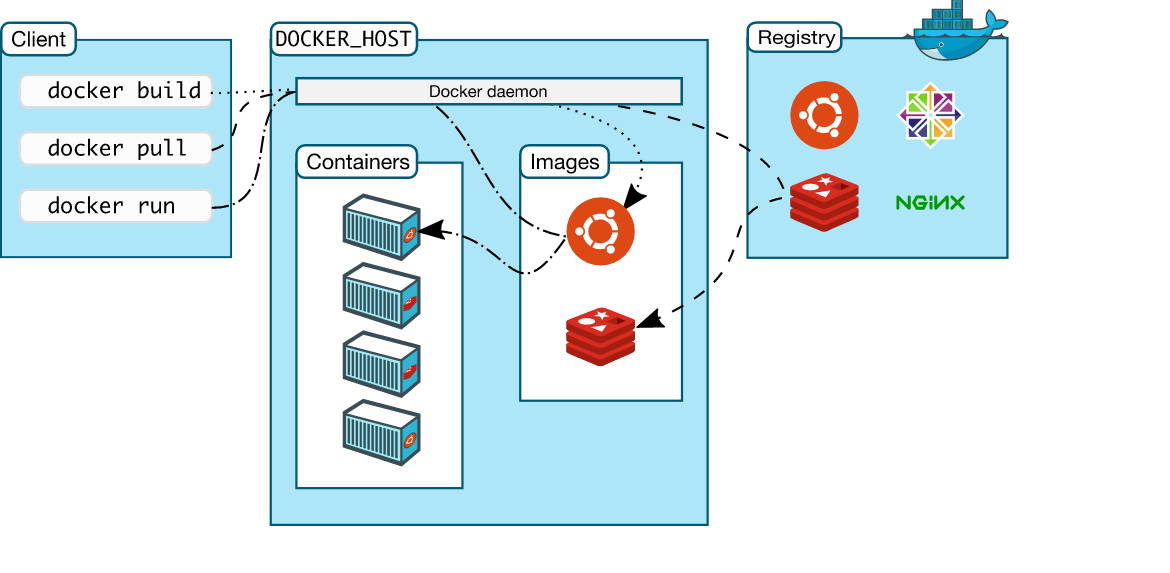

Docker writes the container logs to files. Filebeat then reads those files and transfers the logs to Elasticsearch. Here, Filebeat is used as a replacement for Logstash.
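To illustrate what "reading those files" involves, the sketch below parses lines in the format produced by Docker's `json-file` logging driver, where each line is a JSON object with `log`, `stream` and `time` keys. It assumes that driver is in use. The function name `parse_docker_log_lines` and the sample lines are hypothetical, and this is not how Filebeat itself is implemented.

```python
import json
from typing import Iterable, Iterator


def parse_docker_log_lines(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one event per valid json-file log line, skipping anything malformed."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue
        try:
            entry = json.loads(raw)
        except json.JSONDecodeError:
            continue  # a partially written or corrupt line
        if not isinstance(entry, dict):
            continue
        yield {
            "timestamp": entry.get("time"),
            "stream": entry.get("stream"),
            # Docker keeps the trailing newline of each message; drop it.
            "message": str(entry.get("log", "")).rstrip("\n"),
        }


# Hypothetical sample lines in the json-file format.
sample_lines = [
    '{"log":"GET / 200\\n","stream":"stdout","time":"2024-01-01T00:00:00.000000000Z"}',
    "not json at all",
    '{"log":"upstream timed out\\n","stream":"stderr","time":"2024-01-01T00:00:01.000000000Z"}',
]

events = list(parse_docker_log_lines(sample_lines))

if __name__ == "__main__":
    for event in events:
        print(event["timestamp"], event["stream"], event["message"])
```

A real shipper also has to track file offsets, follow log rotation and batch events before sending them, all of which this sketch leaves out.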
